There comes a time in your career as a backend developer when you need to answer this question:

I need to build an asynchronous application using distributed queues, which broker can I use?

Let me stop you there!

Our natural instinct as engineers is to list the tools we already know, or want to get familiar with (when a technology is new and well known), and start using one of them.

Unfortunately, at that exact point in time, we have missed the first and most important question, which needs an answer before all others: What are our present and sometimes-future use cases/requirements, and what tool will best solve them?

This was the beginning of our story when it came to designing a major feature. Our instincts as engineers took over. Our first question was not the most important one, and from there, we found the process of selecting the right tool less effective.

Our team had a few meetings to discuss our need for a distributed queue, where different limitations/features of different technologies (from different paradigms) kept the focus away from our most important requirements, and from reaching a decision and consensus.

At that point, we decided to go back to basics and asked:

What is the use case we are trying to solve, and what are the areas in which we have no room for compromise?

As always, let’s start with the requirements.

Step 1: Fine-tune the problem you are trying to solve and check how the technology/tool architecture aligns with your goals and considerations

When choosing a message broker or event broker, there are many things to consider: high availability, fault tolerance, multi-tenancy, support for multiple cloud regions, the ability to sustain high throughput at low latency, and the list goes on.

Most of the time, when reading about the main features of either an event or message broker, we are presented with the most complex use cases, which most companies or products never fully utilize or need.

In engineering, as in life, there is a common saying that applies a lot of the time:

“God is in the details, but the devil is between the lines.”

When choosing between the two paradigms of event broker vs. message broker, the “devil” lies in more low-level technical considerations, such as:

message consumption or production acknowledgment methods, deduplication, prioritization of messages, consumer threading model, message consumption methods, message distribution/fanout support, poison pill handling, etc.

Oranges and apples (differences between concepts)

Step 2: Understand the differences between the two paradigms

Event broker

Stores a sequence of events. Events are usually appended to a log (queue or topic) in the order in which they arrived at the event broker. Events in the topic or queue are immutable and their order cannot be changed.

As events are published to the queue or topic, the broker identifies subscribers to the topic or queue, and makes the events available to multiple types of subscribers.

Producers and consumers need not be familiar with each other.

Events can be retained for days or weeks, because successfully consuming them does not evict them from the queue/topic.
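To make the append-only idea concrete, here is a minimal sketch in plain Python. It is a toy model, not a real event broker; the `EventLog` class and its methods are names I made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log: events keep arrival order and survive being read."""
    events: list = field(default_factory=list)

    def append(self, event) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset assigned to the new event

    def read(self, offset: int, max_events: int = 10) -> list:
        # Reading never evicts anything; each reader tracks its own offset.
        return self.events[offset:offset + max_events]


log = EventLog()
for name in ["created", "updated", "deleted"]:
    log.append(name)

first_read = log.read(0)
replay = log.read(0)  # a second reader, or a replay, sees the same events
```

Because nothing is removed on read, a replay from offset 0 returns exactly what the first reader saw.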

Message broker

Used for services or components to communicate with each other. It enables the exchange of information between applications by transmitting messages from the producer to the consumer asynchronously.

It usually supports the concept of queue, where messages are typically stored for a short period of time. The purpose of the messages in the queue is to be consumed as soon as consumers are available for processing, and dropped after the successful consumption of said message.

Order of message processing in the queue is not guaranteed and can be altered.
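By contrast, a queue forgets a message once it has been consumed successfully. The sketch below is a toy model in plain Python under that assumption; `MessageQueue`, `deliver` and `ack` are hypothetical names, not the API of any real broker.

```python
from collections import deque


class MessageQueue:
    """Toy queue: a message is dropped once a consumer acknowledges it."""

    def __init__(self):
        self._ready = deque()
        self._unacked = {}
        self._next_tag = 0

    def publish(self, body):
        self._ready.append(body)

    def deliver(self):
        """Hand the next ready message to a consumer as (tag, body), or None."""
        if not self._ready:
            return None
        self._next_tag += 1
        body = self._ready.popleft()
        self._unacked[self._next_tag] = body
        return self._next_tag, body

    def ack(self, tag):
        # Successful consumption: the broker forgets the message.
        del self._unacked[tag]

    def depth(self):
        return len(self._ready) + len(self._unacked)


queue = MessageQueue()
queue.publish("resize-image-1")
queue.publish("resize-image-2")

tag, body = queue.deliver()
queue.ack(tag)
```

After the `ack`, only one message is left in the queue; there is nothing to replay.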

Message broker vs. event broker

Normally, when dealing with a short-lived command or task-oriented processing, we would favor using a message broker.

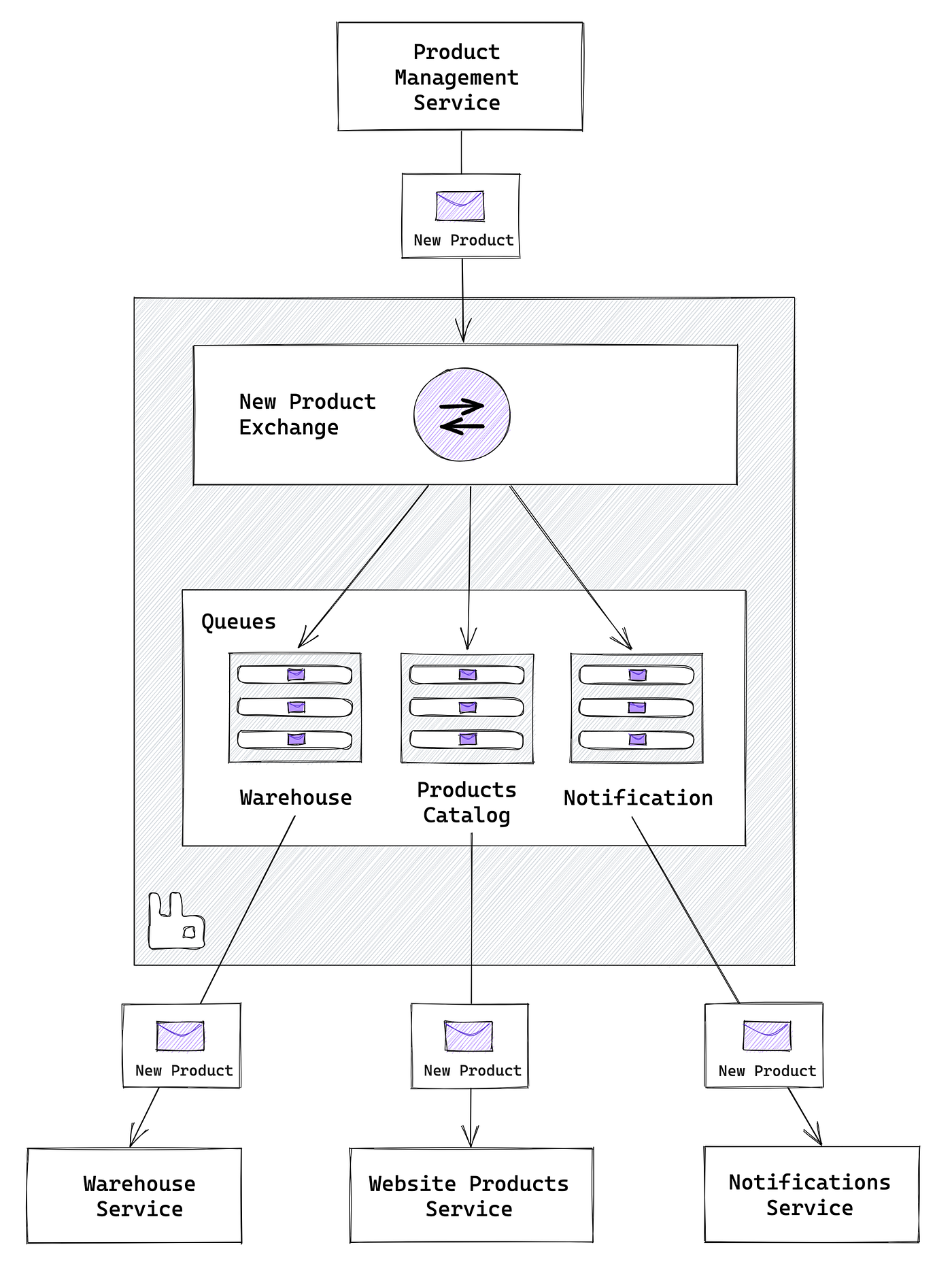

For example, let’s say you are working in an e-commerce company and want to add a new product to your company’s website. This could mean that multiple services need to be aware of it and process this request in an async manner.

The diagram above shows the use of RabbitMQ fanout message distribution, where each service has its own queue attached to a fanout exchange.

The products service sends a message to the exchange with the new product information, and in turn, the exchange sends the message to all the attached queues.

After a message is successfully consumed from a queue, it is deleted, as the services involved do not need to retain or reprocess the message again.
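The fanout flow described above can be sketched in a few lines of plain Python. This is only a toy model of the routing behavior, not RabbitMQ client code, and the service names are made up for the example.

```python
from collections import deque


class FanoutExchange:
    """Toy fanout exchange: copies each message to every bound queue."""

    def __init__(self):
        self.queues = {}

    def bind(self, queue_name):
        self.queues[queue_name] = deque()

    def publish(self, message):
        for bound_queue in self.queues.values():
            bound_queue.append(message)

    def consume(self, queue_name):
        """Pop (and thereby delete) the next message from one service's queue."""
        bound_queue = self.queues[queue_name]
        return bound_queue.popleft() if bound_queue else None


exchange = FanoutExchange()
for service in ["inventory", "search", "pricing"]:  # hypothetical services
    exchange.bind(service)

exchange.publish({"product_id": 42, "name": "Espresso machine"})
seen_by_search = exchange.consume("search")
```

Each service consumes from its own queue, so one service finishing (or failing) does not affect the copies waiting for the others.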

When dealing with current or historical events, usually in large volumes, which need to be processed either one at a time or in bulk, we would favor an event broker.

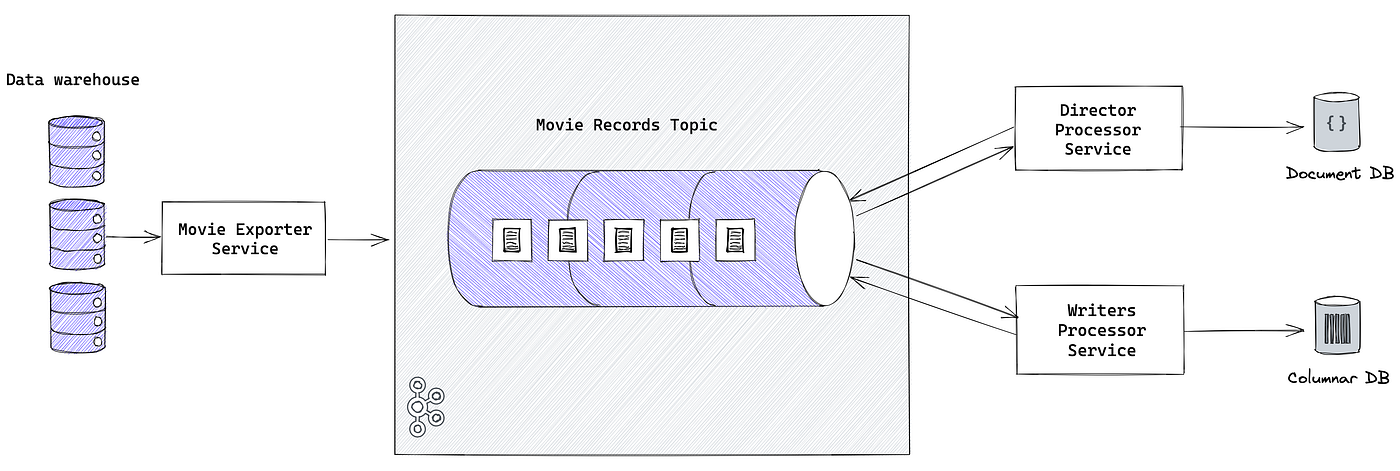

For example, let’s say you are working at an entertainment rating website, and you want to add a new feature to display movie writers and directors to your users. The information is historically stored but not accessible to the services in charge of providing this data.

The diagram above shows Kafka used as an event broker: hundreds of millions of movie records are extracted from the data warehouse and published to a topic, so that each service can append the necessary information to the movie data it stores.

Kafka can accept a massive amount of data in a relatively short period of time, and each consuming service can use its own consumer group to process the movies topic stream independently.
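Here is a rough sketch of why separate consumer groups do not interfere with each other: the read position is kept per group, while the events themselves stay in the topic. This is a plain Python toy model, and the group names are hypothetical.

```python
class Topic:
    """Toy topic that remembers one read position per consumer group."""

    def __init__(self):
        self.events = []
        self.next_offset = {}  # consumer group name -> next offset to read

    def append(self, event):
        self.events.append(event)

    def poll(self, group, max_events=2):
        start = self.next_offset.get(group, 0)
        batch = self.events[start:start + max_events]
        self.next_offset[group] = start + len(batch)
        return batch


movies = Topic()
for movie_id in ["movie-1", "movie-2", "movie-3"]:
    movies.append(movie_id)

catalog_batch = movies.poll("catalog-service")  # hypothetical group names
reviews_batch = movies.poll("reviews-service", max_events=3)
catalog_rest = movies.poll("catalog-service")
```

The catalog group reads the stream in two polls and the reviews group in one, and neither affects what the other sees.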

Important aspects to be aware of

As I previously mentioned, there are a lot of things to consider when choosing the right paradigm for you.

I would like to discuss some key differences which often can make or break your decision about technology.

For this part, I will compare the two most popular technologies to date, Kafka (event broker) and RabbitMQ (message broker). Each represents one of the two paradigms, and I have working experience with both.

I strongly encourage you to take the following points into account in your technology selection process.

Poll vs. push

Kafka consumers work by polling batches of messages, in order, from a topic that is divided into partitions. Each consumer is assigned one or more partitions to consume from, and partitions serve as the parallelism mechanism for consumers (implicit threading model).

Because the number of partitions caps how many consumer instances in a group can actively consume, the producer, who is usually in charge of managing the topic, is implicitly aware of the maximum number of consumer instances that can subscribe to the topic.

The consumer is responsible for handling both success and failure scenarios when processing messages. As messages are being polled in bulk from a partition, the message processing order is guaranteed at the partition level.
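The relationship between partitions and consumers can be sketched as follows. This is a simplified illustration in plain Python: the CRC32 hash and the round-robin assignment are stand-ins I chose for the example, not what a Kafka client actually uses.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a message key to a partition (CRC32 is a stand-in hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(num_partitions, consumers):
    """Round-robin partitions over consumers; surplus consumers get nothing."""
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


two_consumers = assign_partitions(3, ["consumer-a", "consumer-b"])
four_consumers = assign_partitions(
    3, ["consumer-a", "consumer-b", "consumer-c", "consumer-d"]
)
```

With three partitions, a fourth consumer ends up with an empty assignment and sits idle. Messages with the same key always land in the same partition, which is what makes ordering at the partition level possible.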

The way RabbitMQ consumers receive their messages from the queue is by the broker pushing messages to them.

Each message is processed in a singular atomic fashion, allowing for an explicit threading model by the consumer, without the awareness of the producer of the number of consumer instances.

Successful message processing is the responsibility of the consumer, whereas failure handling is done largely by the message broker.

Message distribution is managed by the broker.

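Features such as message prioritization and delayed messages are readily available (delayed delivery typically relies on a plugin or on a TTL combined with dead-lettering), which is possible because the queue mostly does not guarantee message processing order.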

Error handling

The way Kafka handles message processing errors is by delegating the responsibility to the consumer.

If a message has been processed unsuccessfully several times (a poison pill), the consumer application needs to keep track of the number of processing attempts and then produce the message to a separate DLQ (dead letter queue) topic, where it can be examined or re-run later on.

For error handling purposes, the consumer is the one assigned all of the responsibility.

This means that if you want retry or DLQ capabilities, it is up to you to provide the retry mechanism and also to act as a producer when sending a message to a DLQ topic, which, in some edge cases, might lead to message loss.
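To show how much lands on the consumer, here is a minimal sketch in plain Python with no Kafka client involved. `MAX_ATTEMPTS`, `process_batch` and the list standing in for the DLQ topic are all assumptions made for the example.

```python
MAX_ATTEMPTS = 3  # assumed retry budget, not a Kafka setting


def process_batch(batch, handler, dlq_topic):
    """Process a polled batch; the consumer itself retries and dead-letters."""
    attempts = {}
    for message in batch:
        for attempt in range(1, MAX_ATTEMPTS + 1):
            attempts[message] = attempt
            try:
                handler(message)
                break
            except Exception:
                if attempt == MAX_ATTEMPTS:
                    # The consumer now acts as a producer. If it crashes right
                    # here, after the offset was committed, the message is lost.
                    dlq_topic.append(message)
    return attempts


processed, dlq = [], []


def handler(message):
    if message == "bad":
        raise ValueError("cannot process")
    processed.append(message)


attempts = process_batch(["ok-1", "bad", "ok-2"], handler, dlq)
```

The counting, the retry loop and the publish to the DLQ all live in application code, so each of them is a place where a consumer failure can drop a message.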

The way RabbitMQ handles message processing errors is by keeping track of failures in processing a message. After a message is considered a poison pill, it is routed to a DLQ exchange.

This allows for either requeueing of messages or routing to a dedicated DLQ for examination.

In this manner, RabbitMQ provides a guarantee that a message which was not processed successfully will not get lost.
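For comparison, here is the same poison-pill scenario with the bookkeeping moved into the broker. This is a toy model in plain Python, not RabbitMQ code; `ToyBroker` and `delivery_limit` are hypothetical names, and in a real setup the dead-letter routing is configured on the queue (for example with the `x-dead-letter-exchange` argument).

```python
from collections import deque


class ToyBroker:
    """Toy broker that counts deliveries and dead-letters after a limit."""

    def __init__(self, delivery_limit=3):
        self.queue = deque()
        self.dead_letters = []  # stands in for a queue bound to the DLQ exchange
        self.delivery_limit = delivery_limit

    def publish(self, body):
        self.queue.append({"body": body, "deliveries": 0})

    def deliver(self):
        if not self.queue:
            return None
        message = self.queue.popleft()
        message["deliveries"] += 1
        return message

    def nack(self, message):
        # The broker, not the consumer, decides between requeue and dead-letter.
        if message["deliveries"] >= self.delivery_limit:
            self.dead_letters.append(message["body"])
        else:
            self.queue.append(message)


broker = ToyBroker()
broker.publish("ok")
broker.publish("bad")

handled = []
while True:
    message = broker.deliver()
    if message is None:
        break
    if message["body"] == "bad":
        broker.nack(message)  # the consumer only reports the failure
    else:
        handled.append(message["body"])  # success: nothing to requeue
```

The consumer code shrinks to "report success or failure", and the failed message ends up in the dead-letter list without the consumer ever producing anything.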

Consumer acknowledgment and delivery guarantees

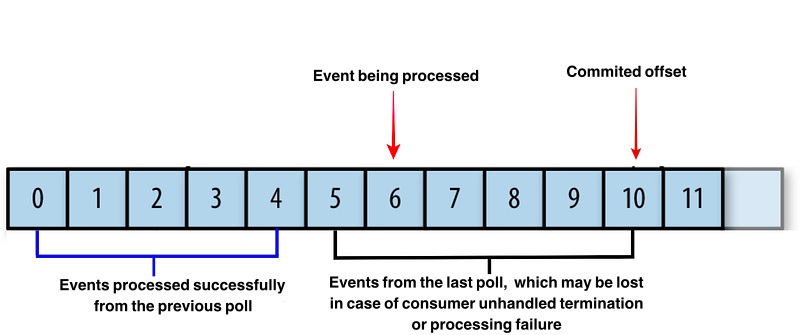

Kafka handles consumer acknowledgment by having the consumer commit the offset of the batch of messages it polled from the topic's partition.

Out of the box, the Kafka client commits the offset automatically, regardless of whether the message was processed successfully, which may lead to message loss, as shown in the image above.

This behavior can be changed by having the consumer code take responsibility for committing the offsets of the fetched messages manually, including handling failures in message consumption.
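The difference between the two commit strategies can be sketched with a deliberately simplified model in plain Python. It assumes the worst case for automatic commits, where the offset moves forward for the whole batch before processing finishes; a real client commits on a timer, so treat this as an illustration rather than a description of the client's exact behavior. `consume_once` and `crash_on` are names invented for the example.

```python
def consume_once(log, committed, auto_commit, crash_on=None):
    """One poll-and-process cycle. Returns (processed, new_committed_offset)."""
    batch = log[committed:]
    processed = []
    if auto_commit:
        # The offset advances whether or not processing succeeds.
        committed += len(batch)
    for message in batch:
        if message == crash_on:
            return processed, committed  # simulated crash in mid-batch
        processed.append(message)
        if not auto_commit:
            committed += 1  # commit only what was actually processed
    return processed, committed


log = ["m0", "m1", "m2"]

# Automatic commit: crash on m1, then restart from the committed offset.
auto_first, auto_offset = consume_once(log, 0, auto_commit=True, crash_on="m1")
auto_after_restart, _ = consume_once(log, auto_offset, auto_commit=True)

# Manual commit: same crash, same restart.
manual_first, manual_offset = consume_once(log, 0, auto_commit=False, crash_on="m1")
manual_after_restart, _ = consume_once(log, manual_offset, auto_commit=False)
```

In the automatic case the restarted consumer finds nothing left to read, so `m1` and `m2` are never processed. In the manual case it resumes at `m1`.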

RabbitMQ handles consumer acknowledgment by having the consumer “ack” or “nack” each message atomically, which allows a retry policy or DLQ, if needed, to be managed by the message broker.

Out of the box, RabbitMQ client acknowledgment is done automatically, regardless of whether the message was processed successfully. The acknowledgment can be controlled manually through a configuration on the consumer side, allowing the message to be pushed to the consumer again for reprocessing in case of failure or timeout.

Both RabbitMQ and Kafka provide, in most cases, an at-least-once guarantee for message/event processing, which means the consumer should be idempotent in order to handle the same message/event being processed more than once.
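A minimal sketch of an idempotent consumer, assuming every message carries a unique `id` field (an assumption of this example, not something either broker adds for you). The in-memory set stands in for whatever durable store you would use in practice.

```python
class IdempotentConsumer:
    """Applies each message at most once, keyed by its id."""

    def __init__(self):
        self.seen_ids = set()  # in production this would be a durable store
        self.stock = 0

    def handle(self, message):
        if message["id"] in self.seen_ids:
            return False  # duplicate delivery: skip the side effect
        self.stock += message["quantity"]
        self.seen_ids.add(message["id"])
        return True


consumer = IdempotentConsumer()
deliveries = [
    {"id": "m-1", "quantity": 5},
    {"id": "m-2", "quantity": 3},
    {"id": "m-1", "quantity": 5},  # redelivered duplicate
]
results = [consumer.handle(message) for message in deliveries]
```

The redelivered `m-1` is recognized and skipped, so the stock reflects each message exactly once even though the broker delivered it twice.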

Our process

Step 3: Choose the technology by your use case and not the other way around

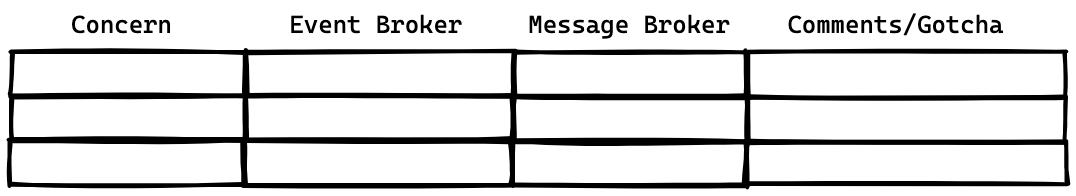

The most important part for us was compiling a list of technical criteria for our solution and marking as “no go” the requirements we couldn’t live without as a team and as a product.

In the spirit of going back to basics, I used a plain old table to compile and compare the different criteria and also mentioned some “gotcha”s. Remember, “The devil is between the lines.”

This really helped organize and put a focus around what was critical for us and what we couldn’t live without.

For example, one of our “no go” requirements was that we couldn’t afford to lose messages in case there was an error in processing.

As you might remember from the section above, when using Kafka where a DLQ is needed, the consumer is also a DLQ producer. This means that in some cases of failures in the consumer, the message will not be sent to the DLQ topic, causing potential message loss.

At this point, as you might have guessed, we decided to go with the message broker.

Our feature consisted of a command/task-oriented processing use case, and the message broker met all of our product/data volume requirements, and also our team’s needs.
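The selection step itself is simple enough to sketch in code. The capability flags below only restate how this post characterizes the two brokers; they are not an authoritative feature matrix, and the criterion names are made up for the example.

```python
# Capabilities as characterized in this post; do your own research before reuse.
CANDIDATES = {
    "Kafka": {"broker_managed_dlq": False, "retains_consumed_events": True},
    "RabbitMQ": {"broker_managed_dlq": True, "retains_consumed_events": False},
}


def shortlist(candidates, no_go):
    """Keep only the candidates that satisfy every 'no go' criterion."""
    return [
        name
        for name, capabilities in candidates.items()
        if all(capabilities.get(criterion, False) for criterion in no_go)
    ]


our_choice = shortlist(CANDIDATES, no_go=["broker_managed_dlq"])
```

Starting from the “no go” list rather than from the tools means a different use case, such as one that must replay history, produces a different shortlist from the same table.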

Final thoughts

The messaging and event streaming ecosystems consist of many solutions, each with dozens of different aspects that are important to consider and be familiar with.

It is vital that we enter each ecosystem with our eyes wide open, and have a clear understanding of these different paradigms. They will have a great effect on our day-to-day (and sometimes night) life as engineers.

In my next blog post, I will walk through the comparison table I created between the two paradigms and dive deeper into the more technical aspects of each.
